[CogSci19] Decomposing Human Causal Learning: Bottom-up Associative Learning and Top-down Schema Reasoning

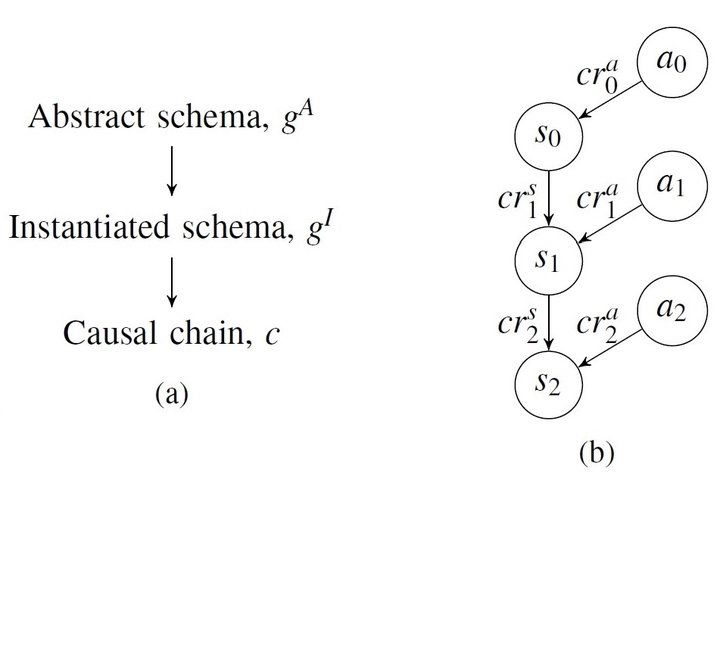

(a) An illustration of the hierarchical structure of the model. A bottom-up associative learning theory, $\beta$, and a top-down causal theory, $\gamma$, serve as priors for the rest of the model. The model makes decisions at the resolution of causal chains. (b) An atomic causal chain. The chain is composed of a set of subchains, $c_i$, where each $c_i$ is defined by: (i) $a_i$, an action node that the agent can intervene upon, (ii) $s_i$, a state node capturing the time-invariant attributes and time-varying fluents of the object, (iii) $cr_i^a$, the causal relation between $a_i$ and $s_i$, and (iv) $cr_i^s$, the causal relation between $s_i$ and $s_{i-1}$.
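The subchain structure in panel (b) can be written down as a small data structure. The following is a minimal Python sketch, not the authors' implementation. Several parts of it are illustrative assumptions: the class names `Subchain` and `CausalChain`, the plain-string encoding of causal relations, and the lever/door names. It also leaves $cr_0^s$ empty for the first subchain, on the assumption that the first subchain has no predecessor state.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Subchain:
    """One subchain c_i of an atomic causal chain (hypothetical encoding)."""
    action: str              # a_i: action node the agent can intervene upon
    state: str               # s_i: state node (object attributes and fluents)
    cr_action: str           # cr_i^a: causal relation between a_i and s_i
    cr_state: Optional[str]  # cr_i^s: relation between s_i and s_{i-1}; None for the first subchain (assumption)


@dataclass
class CausalChain:
    subchains: List[Subchain] = field(default_factory=list)

    def action_sequence(self) -> List[str]:
        # Intervention order implied by the chain: a_0, a_1, ..., a_k.
        return [c.action for c in self.subchains]


# Hypothetical three-step chain; names and relation strings are illustrative only.
chain = CausalChain([
    Subchain("push_lever_A", "lever_A", "pushed -> unlocked", None),
    Subchain("push_lever_B", "lever_B", "pushed -> unlocked", "requires lever_A unlocked"),
    Subchain("push_door", "door", "pushed -> open", "requires lever_B unlocked"),
])
print(chain.action_sequence())  # ['push_lever_A', 'push_lever_B', 'push_door']
```

Keeping $cr_i^a$ and $cr_i^s$ as separate fields mirrors the caption's distinction. An action's effect on its own object is one kind of relation. The dependency of one object's state on the previous object's state is another.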

Abstract

Transfer learning is fundamental to intelligence: agents expected to operate in novel, unfamiliar environments must be able to transfer previously learned knowledge to new domains or problems. However, knowledge transfer manifests at different levels of representation, and the computational mechanisms that support different types of transfer learning remain unclear. In this paper, we approach the transfer learning challenge by decomposing it into two underlying computational mechanisms: bottom-up associative learning and top-down causal schema induction. We adopt a Bayesian framework to model causal theory induction and use the inferred causal theory to transfer abstract knowledge between similar environments. Specifically, we train a simulated agent to discover and transfer useful relational and abstract knowledge by interactively exploring the problem space and extracting relations from observed low-level attributes. A set of hierarchical causal schemas is constructed to determine the task structure. Our agent combines causal theories and associative learning to select the sequence of actions most likely to accomplish the task. To evaluate the proposed framework, we compare the performance of the simulated agent with that of human participants in the OpenLock environment. OpenLock is a virtual “escape room” with a complex hierarchy that requires agents to reason about the causal structures governing the system. While the simulated agent requires more attempts than human participants, the qualitative trends of transfer across learning situations are similar for humans and our trained agent. These findings suggest that human causal learning in complex, unfamiliar situations may rely on the synergy between bottom-up associative learning and top-down schema reasoning.
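The abstract describes combining a top-down causal theory with bottom-up associative learning to choose which action sequence to try. The sketch below gives a rough picture of how two such sources of evidence might be combined. Each candidate chain gets a score equal to a schema prior (standing in for $\gamma$) times the product of per-action associative strengths (standing in for $\beta$). The scores are normalized, and the most probable chain is picked. Chains that have already failed are zeroed out. This simple product rule is a hypothetical assumption for illustration and is not the model's actual equations or parameters. The same holds for the "rule out failed chains" step, the function names, and all numbers.

```python
from math import prod
from typing import Dict, List, Set, Tuple

Chain = Tuple[str, ...]  # a candidate causal chain, encoded as its action sequence (assumption)


def chain_posterior(
    chains: List[Chain],
    schema_prior: Dict[Chain, float],   # hypothetical top-down term: P(chain | schema)
    assoc_strength: Dict[str, float],   # hypothetical bottom-up term: per-action strength
    ruled_out: Set[Chain],              # chains already attempted without success
) -> Dict[Chain, float]:
    """Score = schema prior x product of associative strengths, normalized over candidates."""
    scores = {}
    for c in chains:
        if c in ruled_out:
            scores[c] = 0.0
        else:
            # Unknown actions default to a neutral strength of 1.0 (assumption).
            scores[c] = schema_prior.get(c, 0.0) * prod(assoc_strength.get(a, 1.0) for a in c)
    total = sum(scores.values())
    if total == 0:
        return {c: 0.0 for c in chains}
    return {c: s / total for c, s in scores.items()}


def select_chain(posterior: Dict[Chain, float]) -> Chain:
    # Greedy choice: the chain with the highest normalized score.
    return max(posterior, key=posterior.get)


# Hypothetical usage with made-up numbers.
chains = [("A", "B", "door"), ("B", "A", "door"), ("A", "C", "door")]
prior = {chains[0]: 0.4, chains[1]: 0.2, chains[2]: 0.4}
assoc = {"A": 0.9, "B": 0.8, "C": 0.3, "door": 1.0}
post = chain_posterior(chains, prior, assoc, ruled_out=set())
best = select_chain(post)
print(best, round(post[best], 3))  # ('A', 'B', 'door') 0.533
```

Multiplying the two terms means a chain is selected only if it is plausible under both the abstract schema and the learned associations. A near-zero value in either term effectively vetoes a candidate.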