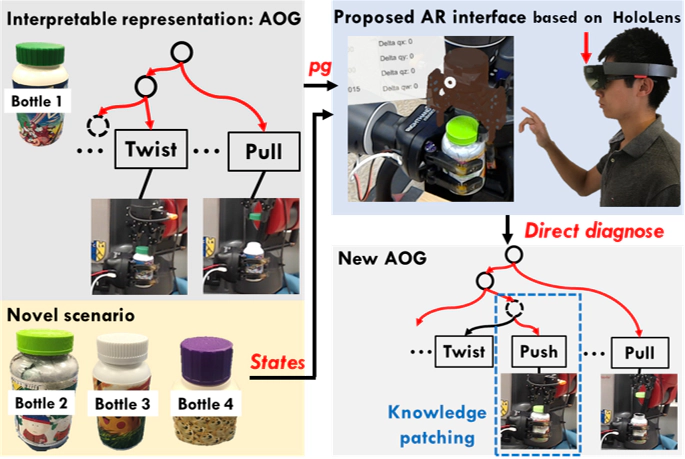

Given knowledge represented by a T-AOG of opening conventional bottles, the robot tries to open unseen medicine bottles with a safety lock. The proposed AR interface visualizes the inner functioning of the robot during action execution. Thus, the user can understand the knowledge structure inside the robot’s mind, directly oversee the entire decision-making process through HoloLens in real time, and finally interactively correct the missing action (push) to open a medicine bottle successfully.

Abstract

We present a novel Augmented Reality (AR) approach, using Microsoft HoloLens, to address the challenging problems of diagnosing, teaching, and patching the interpretable knowledge of a robot. A Temporal And-Or graph (T-AOG) of opening bottles is learned from human demonstration and programmed into the robot. This representation yields a hierarchical structure that captures the compositional nature of the given task and is highly interpretable for users. By visualizing the knowledge structure represented by the T-AOG and the decision-making process of parsing the T-AOG, the user can intuitively understand what the robot knows, supervise the robot’s action planner, and monitor visually latent robot states (e.g., the force exerted during interactions). Given a new task, such comprehensive visualizations of the robot’s inner functioning let users quickly identify the reasons for failures, interactively teach the robot a new action, and patch it into the knowledge structure represented by the T-AOG. In this way, the robot can solve similar but new tasks with only minor modifications provided interactively by the users. This process demonstrates the interpretability of our knowledge representation and the effectiveness of the AR interface.
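To make the idea of patching a T-AOG concrete, the following is a minimal sketch, not the authors' implementation. It assumes a simplified graph in which And-nodes expand their children in temporal order, Or-nodes select one alternative (here simply the first, whereas a learned model would typically use branching probabilities), and terminal nodes are atomic actions. Apart from `push`, which the text names as the missing action, all names (`Node`, `parse`, `patch_before`, `grasp_top`, `grasp_side`, `twist`, `pull`) are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    """A node in a simplified And-Or graph (hypothetical structure)."""
    name: str
    kind: str  # "and", "or", or "terminal"
    children: List["Node"] = field(default_factory=list)


def terminal(name: str) -> Node:
    return Node(name, "terminal")


def parse(node: Node) -> List[str]:
    """Expand the graph into a single action sequence."""
    if node.kind == "terminal":
        return [node.name]
    if node.kind == "and":
        # And-node: concatenate the expansions of all children in order.
        sequence: List[str] = []
        for child in node.children:
            sequence.extend(parse(child))
        return sequence
    # Or-node: pick the first alternative (an assumption made for brevity).
    return parse(node.children[0])


def patch_before(root: Node, target: str, new_action: str) -> bool:
    """Insert a new terminal action before `target` under its parent And-node.

    Returns True if the graph was patched, False if `target` was not found.
    """
    for i, child in enumerate(root.children):
        if child.kind == "terminal" and child.name == target:
            if root.kind == "and":
                root.children.insert(i, terminal(new_action))
                return True
        elif patch_before(child, target, new_action):
            return True
    return False


# Hypothetical knowledge for opening a conventional bottle.
open_bottle = Node("open_bottle", "and", [
    Node("grasp", "or", [terminal("grasp_top"), terminal("grasp_side")]),
    terminal("twist"),
    terminal("pull"),
])

print(parse(open_bottle))  # ['grasp_top', 'twist', 'pull']

# The user teaches the missing action for a bottle with a safety lock.
patch_before(open_bottle, "twist", "push")
print(parse(open_bottle))  # ['grasp_top', 'push', 'twist', 'pull']
```

In this sketch the patch is a local edit to one And-node, which mirrors the claim that a similar but new task can be handled with only a minor modification to the existing structure.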